Data News — Snowflake and Databricks summits

Data News #23.26 — Snowflake and Databricks summits wrap-up and a few fundraising rounds.

Hey, right after saying I would try to send the newsletter on a fixed schedule, I didn't. Haha. Still, here is the newsletter for last week. It's a small wrap-up of the Snowflake Summit and the Databricks Data + AI Summit, which both took place last week.

There are so many sessions at both summits that it's impossible to watch everything. Moreover, Databricks and Snowflake don't make all of them freely available online, so I couldn't watch everything anyway. I'll try to recap the major announcements by reading between the lines and going through social network posts.

If you want another take on both conferences, Ananth from Data Engineering Weekly wrote about the conference extravaganza and a few trends he wanted to discuss.

Snowflake Summit ❄️

Snowflake's marketing tagline has always been "the Data Cloud", and with this year's announcements we can feel them really accelerating toward that vision. Snowflake wants you to send all your data to their cloud, where you can now use a lot of different features to work on it. They announced:

- Document AI — A new integrated product where you can ask natural-language questions about documents (PDFs, etc.), which LLMs then try to answer. Once you are happy with the quality of the answers, you'll be able to publish the model, use it in SQL queries and build pipelines on top of it to run inference on new documents and send emails when needed.

- Snowflake Native App Framework — Through the Snowflake Marketplace, vendors and developers will be able to publish apps that run on your data. In the UI you pick the tables you want the app to run on. Here is the Native Apps marketplace: there are only 25 apps so far, and it only works on AWS at the moment.

- Container Services & Nvidia partnership — Snowflake is slowly becoming a one-stop shop. With Container Services you will be able to run your own apps in a Kubernetes cluster managed by Snowflake. For instance, you'll soon be able to launch Airflow (via Astronomer) within Snowflake. On the same topic, the Nvidia partnership will bring GPUs to the Snowflake offering for users who need large compute for AI training. Thanks to this, data does not move out of Snowflake or, to tell the truth, out of your underlying cloud.

- Dynamic Tables — Dynamic tables are streaming tables. You can send real-time data to Snowflake, coming from Kafka for instance, and with dynamic tables you can create a table on top of that data that keeps refreshing in near real time, using only what's needed to compute the new state. Dynamic tables were announced last year but finally seem to be in preview. The demo also shows how the SQL UI integrates LLMs to generate SQL from a comment.
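To make "using only what's needed to compute the new state" more concrete, here is a toy sketch of incremental refresh in Python. It is not Snowflake's implementation or API: the class `IncrementalSumTable`, the `refresh` method and the `(region, amount)` row shape are all hypothetical. It also assumes an append-only source (e.g. events landed from Kafka) and a simple `SUM ... GROUP BY` aggregate, which is the easiest case to maintain incrementally.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple

Row = Tuple[str, int]  # hypothetical event shape: (region, amount)


class IncrementalSumTable:
    """Toy equivalent of SELECT region, SUM(amount) FROM source GROUP BY region.

    Assumes the source is append-only, so each refresh only has to fold in
    the rows that arrived since the previous refresh.
    """

    def __init__(self) -> None:
        self.state = defaultdict(int)  # region -> running total
        self.offset = 0  # number of source rows already processed

    def refresh(self, source: List[Row]) -> dict:
        # Read only the rows appended after the last processed offset.
        delta = source[self.offset:]
        for region, amount in delta:
            self.state[region] += amount
        self.offset = len(source)
        return dict(self.state)


def full_recompute(source: Iterable[Row]) -> dict:
    # Baseline: rebuild the aggregate from scratch on every refresh.
    totals = defaultdict(int)
    for region, amount in source:
        totals[region] += amount
    return dict(totals)


if __name__ == "__main__":
    source: List[Row] = [("EMEA", 10), ("US", 5)]
    table = IncrementalSumTable()
    print(table.refresh(source))  # {'EMEA': 10, 'US': 5}
    source += [("EMEA", 7), ("APAC", 3)]  # new events arrive
    print(table.refresh(source))  # {'EMEA': 17, 'US': 5, 'APAC': 3}
    # The incremental state matches a full recompute.
    assert table.refresh(source) == full_recompute(source)
```

The second refresh only touches the two new rows instead of rescanning the whole source. Real engines also have to handle updates, deletes and joins, which is where incremental maintenance gets much harder.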

PS: s/o to David who also covered Snowflake changes.

Data + AI Summit 🗻

The theme of the Databricks summit was "Generation AI", a well-found title given the current state of data. I watched the 3 keynotes to find the announcements. They felt less structured than Snowflake's, but here are a few takeaways:

- Microsoft and Databricks are still best friends, even after Fabric. In a quick Skype call, Satya Nadella, Microsoft's CEO, said that discussing responsible AI while developing it is a good thing, and that we should explore 3 tracks in parallel: misinformation, real-world harms (incl. bias) and AI takeoff.

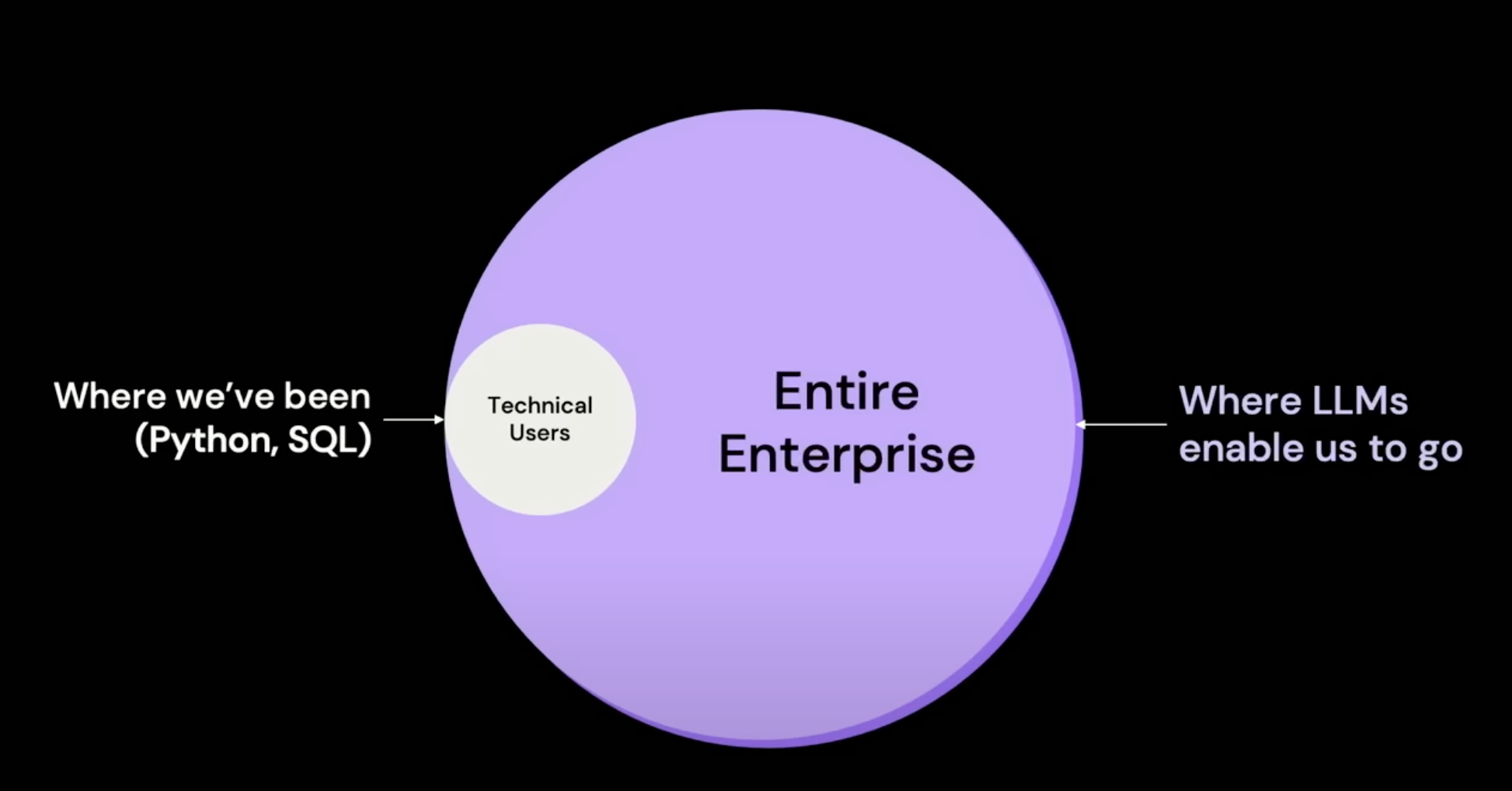

- The CEO of Databricks was on stage and used words that I like. He said that:
  - data should be democratised to every employee
  - AI should be democratised in every product

- LakehouseIQ — Matei Zaharia presented it on stage. LakehouseIQ is a way to use your enterprise signals (org charts, lineage, docs, queries, catalog, etc.) to contextualise the LLMs used in UI assistants. In the demo, LakehouseIQ is asked to "get revenue for Europe" and understands that, for this company, the region is not called Europe but EMEA. Here is a demo of LakehouseIQ, which also shows that you can generate SQL from a comment in the UI. This is their way to democratise data to every employee.

- Databricks acquires MosaicML for $1.3b — It should really go in the Data Economy category, but you know. I shared MosaicML last week because they are the ones behind the MPT models, among the first open-source LLMs released under the Apache License. This is a great move from Databricks to really position themselves in the AI ecosystem. As a side note, Naveen Rao, Mosaic's CEO, said that training MPT-30B from scratch takes around 12 days and less than $1m.

- LakehouseAI — Research has shown that 25% of queries get their costs misestimated by query optimisers, and that the error can reach a factor of 10^6. Databricks built a new way to do I/O with AI: they promise that you won't have to create any kind of index, because the engine can "triangulate" where the data is and be faster than before. Basically, you should see LakehouseAI as an AI DBA that does magical stuff to your engine by learning from the telemetry of all your queries.

- They also announced a lot of stuff around Spark.
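A common way to quantify how wrong an optimiser's estimate is, as in the LakehouseAI misestimation figures, is the q-error: the ratio between the estimated and the actual value, taken in whichever direction gives a number ≥ 1. I'm assuming the keynote figure is an error factor of this kind, but the talk may have used a different metric. The sketch below is a minimal Python illustration; the functions `q_error` and `share_misestimated`, the threshold of 10 and the sample numbers are all made up for the example.

```python
from typing import Iterable, Tuple


def q_error(estimate: float, actual: float) -> float:
    """Symmetric misestimation factor: max(est/act, act/est), always >= 1."""
    if estimate <= 0 or actual <= 0:
        raise ValueError("q-error is only defined for positive values")
    return max(estimate / actual, actual / estimate)


def share_misestimated(pairs: Iterable[Tuple[float, float]], threshold: float = 10.0) -> float:
    """Fraction of (estimate, actual) pairs whose q-error exceeds `threshold`."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    bad = sum(1 for est, act in pairs if q_error(est, act) > threshold)
    return bad / len(pairs)


if __name__ == "__main__":
    # Hypothetical (estimated rows, actual rows) pairs for four queries.
    samples = [(1_000, 1_200), (50, 40), (10, 10_000_000), (500, 450)]
    for est, act in samples:
        print(f"estimate={est:>10} actual={act:>10} q-error={q_error(est, act):g}")
    print(share_misestimated(samples))  # 0.25
```

Because the q-error is symmetric, underestimating by 10^6 is penalised exactly like overestimating by 10^6. That matters for optimisers, since either mistake can lead to a very bad join order.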

As you can see, Lakehouse is becoming more than ever a marketing brand for Databricks. In the end, what we want is a place to store data and an engine to query it. That's all.

Data Economy 💰

- ThoughtSpot acquires Mode Analytics for $200m — This is consolidation at work. ThoughtSpot is a company that tries to bring AI into the analytics domain. With ThoughtSpot you can define insights and give people access to them, and with Mode they gain an end-user application that people are already using. You might also know Mode through Benn Stancil's blog.

- Hopsworks raises $6.5m — Hopsworks is a feature store.

- Inflection AI raises $1.3b from Bill Gates, Eric Schmidt, Microsoft and Nvidia. They developed a personal AI called Pi, designed to be supportive, smart and there for you at any time. Let's see where it goes.

See you soon ❤️.

blef.fr Newsletter
