Data News — Week 22.47

Data News #22.47 — Advent of data 2022, how to build the data dream team, Postgres to DynamoDB, graphs and scaled data mesh.

Hello you, I hope this data news finds you well. Time flies to be honest.

I've launched in a rush an Advent of Data. The goal is simple, in December: 24 data people will produce 24 data gems. Every day a new piece of content will be release on a dedicated website. If you wanna join the initiative please reply, we are still looking for a few slots to be filled in. I know it's a late notice thing, but this is a good occasion to contribute to the data community.

How to build the data dream team

This Monday I've done my first ever presentation in a international meetup, in English. The experience was great and I enjoyed it, I hope people in the audience liked it also. A video should be out soon with the whole presentation but while waiting here a small glimpse of the talk.

In this presentation I tried to share ideas on how you can create a data dream team. This is more a presentation that is meant to be a collection of ideas and concepts you have to think about rather than a go-to solution. I'd also say that you should always avoid following blindly general advices because every time implementation depends. It always depends on so many things: the product, the resources you have, the company vision, the localisation, etc.

So yeah, right now the data market is pretty hot. A lot of companies are heavily looking for senior data engineers and analytics people—whether DA or AE—while layoffs are as high as the COVID period. In my opinion in order to create the data dream team you should understand you team creation funnel. Something like:

- Attract — you need to make people candidate or at least answer to acquisition managers

- Welcome — you never get a second chance to make a first impression, so pay attention to the first week

- Onboard — after the welcoming part you need to pay attention at the first 6 months

- Keep — this is as important as previous step in the funnel, you have to pay attention to keep people satisfied

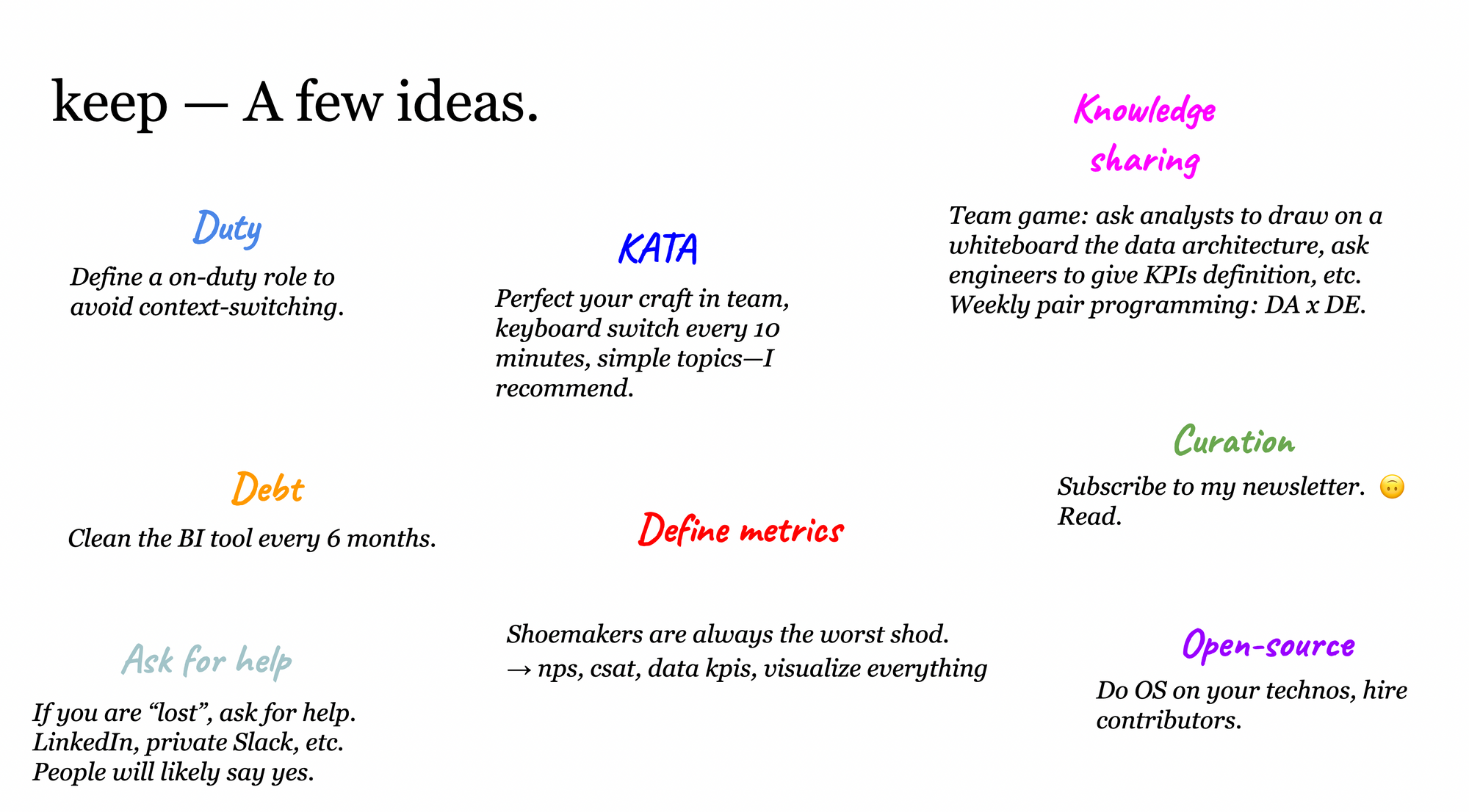

At the meetup I've especially detailed what you can do to keep people. You have to build the data dream team everyone wants to join and no one wants to leave.

To be honest this impossible to have everything done instantly this is more a long time game. I also think that there are 3 majors levers that are very important in the happiness of a team.

- You need to find the correct roles ratio. I mean, how many data engineers the team should have compared to scientists and analysts. In the past Jesse Anderson always advocated for 2-3 DE per DA/DS in simple team and 4-5 in more complex setup. I still think this is only a dream. As of today I believe the a good ratio would be DE / (DA + DS) > 1. This ratio management is only a frustration management. With simple words, the less engineers the more data people will be frustrated.

- Define the vision, the strategy and roadmap of the data team. Every data team go through an identity crisis at some point. A lot of data teams started by doing Shadow IT by saying yes to every data related project. But at some point it has to stop. Data team mission should be clear and understood by everyone.

- Last but not least, aim for no tech debt. Obviously this is easier said than done. But this is something that should be tackled early a in team because this is another topic that will lead for frustration. And frustration leads to resignation.

Finally I have a slide that I really like with strong opinion on topics that is meant to just make people think. Here below:

- Automate everything (IaC)

- Data engineers don’t write ETL

- Standards, a straight pipe is easier to fix than a curved one

- Data analysts know data better than everyone

- Do Python, don't do Java

- Real time is useless

- Describe every warehouse field

- SREs and software engineers are your best friends

- Who has 0 pipelines issues in the last 30 days?

- Who can’t answer to this question in less than 5s?

- Ask your DE to talk to stakeholders

- GDPR—no one does it, right?

This is in a nutshell my presentation. I'm curious to hear what you think about this. In the last slide of my presentation you have links to 10 articles that will help you for sure.

Fast News ⚡️

- In a data-led world, intuition still matters — The title says it all. I mainly think this is a reminder that data-driven decisions are good but as Alfred Sauvy said once "Numbers are fragile beings who, by dint of being tortured, end up confessing everything we want them to say" (thanks to Pierre 😉).

- Google has a secret new project that is teaching artificial intelligence to write and fix code — We are still waiting for self-autonomous car to really replace drivers, so lmao.

- From Postgres to Amazon DynamoDB — Another migration story. This time by Instacart who benchmarked DynamoDB to replace Postgres in their push notification system. In the article they detailed the data model adaptation they did.

- Versioning in analytics platforms — Petrica has a great sense when it comes to depict the analytical work. This time she shows at which step of the analytical work you can add versioning. She also showcases Nessie, a data catalog that works with incremental changes like git.

- Scaled data mesh — The author tries to enlighten the limitations every organisation will face with a mesh strategy. This is mainly a governance problem, but from companies already trying to implement it, I'd say that this is a point already identified.

- World's Simplest Data Pipeline? — "Data Engineering is very simple. It’s the business of moving data from one place to another." This is something I could have said. This is article is so simple, but so true. Few bullet points to check. Every data folk should read it before writing any pipeline.

- Retry pattern — When writting a pipeline you also have to think about error resolution. This resolution can by automated with few retry patterns. This is short and conceptual, but good points.

- Graph for fraud detection — Grab team explained how they used graphs to do fraud detection. Which is, by the way, one of the best way to handle fraud detection.

plane. Have fun reading this. It deeply details how the control plane works.

Data Fundraising 💰

- OneSchema raises $6.3m in Seed. OneSchema believe that even if we have awesome replacements CSV files will stay forever in the tech world. So they developped a suite of tools in order to help engineers to ingest CSVs. With their SDK you can add a drag-and-drop panel that will in the browser auto-detect your CSV and let the user fix the issue while you'll be able to validate the data before inserting it in the database.

See you next week with a new edition and the Advent of Data 🎄.

blef.fr Newsletter

Join the newsletter to receive the latest updates in your inbox.